Large Language Models (LLMs) frequently fail to follow instructions: they imagine beyond the prompt and show low compliance in reasoning tasks. As of October 22, 2025, this remains a significant hurdle.

Despite recent advances, reliable instruction adherence remains a core challenge. Reported Instruction Following Scores (IFS) are often below 0.25, indicating limited faithfulness.

The Rise of Large Language Models (LLMs)

Large Language Models (LLMs) have rapidly emerged as powerful tools with impressive natural language processing capabilities. However, their widespread adoption is increasingly hampered by a critical flaw: inconsistent instruction following. While models like ChatGPT excel at certain tasks, they often struggle with nuanced or complex requests and deviate from specified guidelines.

This rise has brought growing scrutiny of their reliability, because practical applications depend on models executing instructions consistently and accurately. Recent evaluations, such as those using the ManyIFEval benchmark, show that even advanced LLMs have significant shortcomings when asked to satisfy multiple verifiable instructions at once. These shortcomings call for focused research into improving their responsiveness and trustworthiness.

The current state highlights a gap between potential and performance, and closing it will take new approaches before LLMs can deliver on their full promise.

The Core Problem: Why LLMs Fail to Follow Instructions

The fundamental issue is LLMs’ tendency to “imagine beyond the prompt,” generating content that was not explicitly requested. This stems from their predictive nature: they complete patterns rather than strictly follow directives. Ambiguity in natural language compounds the problem, since LLMs can misread subtle nuances and produce unintended outputs.

Limitations in training data also contribute. If the data lacks enough examples of precise instruction execution, the model struggles to generalize. The notorious “hallucination” problem, in which models confidently present false information, further amplifies deviations. As a result, consistent adherence remains elusive even with Reinforcement Learning from Human Feedback (RLHF).

Ultimately, bridging this gap requires addressing both the models’ inherent tendencies and the quality of their foundational knowledge.

Root Causes of Instruction Following Failures

Instruction-following failures have three main sources: ambiguous instructions, limited training data, and the propensity for “hallucinations,” content that departs from the given task and undermines reliable output.

Ambiguity in Natural Language Instructions

Natural language, while flexible for humans, presents inherent ambiguity for Large Language Models (LLMs). The nuances of phrasing, implicit assumptions, and varying interpretations can lead to instruction-following failures. LLMs struggle to discern the precise intent behind a prompt, especially when instructions lack specificity.

This ambiguity manifests in several ways. A seemingly straightforward request can be misinterpreted due to multiple possible meanings. The models may then proceed based on an unintended interpretation, resulting in outputs that don’t align with the user’s expectations. Addressing this requires careful instruction design, minimizing vagueness and maximizing clarity to guide the LLM towards the desired outcome.

Essentially, the models’ understanding is limited by the precision of the input, making unambiguous prompts crucial for successful task completion.

Limitations of Training Data

Large Language Models (LLMs) learn from massive datasets, but inherent limitations within this data contribute to instruction-following failures. If the training data lacks sufficient examples of specific instruction types, or demonstrates inconsistent adherence to formats (like JSON), the model will struggle to generalize effectively.

Furthermore, biases present in the training data can skew the model’s responses, leading to outputs that deviate from intended instructions. The quality and diversity of the data are paramount; insufficient representation of complex reasoning tasks or nuanced requests hinders the model’s ability to perform reliably.

Consequently, even with advanced techniques like RLHF, the foundational limitations of the training data remain a significant obstacle to achieving robust instruction adherence.

The “Hallucination” Problem and Deviation from Instructions

A critical factor in instruction-following failures is the phenomenon of “hallucination,” where LLMs generate information not grounded in the provided context or instructions. This often manifests as models imagining beyond the prompt, creating extraneous details or narratives that weren’t requested.

This deviation isn’t simply a matter of adding fluff; it represents a fundamental inability to constrain output within the specified boundaries. Even when models attempt to comply, they may introduce inaccuracies or irrelevant information, lowering the Instruction Following Score (IFS).

Addressing this requires improving the model’s grasp of those boundaries and its ability to resist generating content outside them, a challenge that remains open as of December 9, 2025.

Evaluating Instruction Following Performance

LLM performance is assessed using metrics like the Instruction Following Score (IFS) and benchmarks such as ManyIFEval, revealing frequent failures to adhere to given instructions.

Instruction Following Score (IFS) as a Metric

The Instruction Following Score (IFS) is a key metric for quantifying how well Large Language Models (LLMs) adhere to provided instructions. It remains below 0.25 across various evaluations, which means that fewer than 25% of the reasoning traces these models generate demonstrably comply with the specified guidelines.

A low IFS highlights a systemic issue: LLMs often struggle to accurately interpret and execute complex requests. This isn’t simply a matter of occasional errors; it represents a fundamental limitation in their ability to consistently translate natural language instructions into correct outputs. Researchers are actively investigating the underlying causes of this low score, aiming to develop methods for substantial improvement.

The IFS provides a quantifiable benchmark, enabling researchers to track progress and compare the effectiveness of different techniques designed to enhance instruction adherence.
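As a minimal sketch, the IFS can be read as the fraction of reasoning traces that comply with the specified instructions. The code below assumes all-or-nothing scoring (a trace counts only if every instruction is satisfied) and uses hypothetical checker functions; it illustrates the idea and is not the reference scoring code of any benchmark.

```python
from typing import Callable, Sequence

# A checker returns True if a trace satisfies one instruction (hypothetical interface).
Checker = Callable[[str], bool]


def instruction_following_score(traces: Sequence[str], checkers: Sequence[Checker]) -> float:
    """Fraction of traces that satisfy every checker (all-or-nothing, an assumption)."""
    if not traces:
        return 0.0
    compliant = sum(1 for trace in traces if all(check(trace) for check in checkers))
    return compliant / len(traces)


# Hypothetical instructions: "write in lowercase" and "use at most 20 words".
checkers = [
    lambda t: t == t.lower(),
    lambda t: len(t.split()) <= 20,
]
traces = [
    "first add the numbers, then divide by two.",
    "First add the numbers, then divide by two.",  # violates the lowercase rule
    "the answer is four.",
    " ".join(["step"] * 25),                        # violates the word limit
]
print(instruction_following_score(traces, checkers))  # 0.5
```

Because a single violated instruction makes the whole trace non-compliant, the score drops quickly as more instructions are attached to each prompt.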

The ManyIFEval Benchmark Dataset

ManyIFEval is a benchmark dataset designed specifically to evaluate instruction-following capabilities in Large Language Models (LLMs). Its task prompts each contain up to ten objectively verifiable instructions. Packing many constraints into a single prompt directly probes the observed tendency of LLMs to fail when confronted with multiple, nuanced requirements.

ManyIFEval was motivated by the need for a more challenging and realistic assessment of LLM performance. Existing benchmarks often fall short in capturing the intricacies of real-world instruction sets. By incorporating multiple verifiable instructions, ManyIFEval provides a more granular and insightful evaluation.

Researchers use ManyIFEval to pinpoint specific areas where LLMs struggle, paving the way for targeted improvements in instruction adherence.
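The sketch below shows what “objectively verifiable” can mean in practice: each instruction is paired with a deterministic check, and a response is scored against up to ten of them. The `VerifiableInstruction` class and the four example instructions are hypothetical and are not taken from ManyIFEval itself.

```python
import re
from dataclasses import dataclass
from typing import Callable, List

MAX_INSTRUCTIONS = 10  # the text describes prompts with up to ten instructions


@dataclass
class VerifiableInstruction:
    """One instruction paired with a deterministic check (hypothetical schema)."""
    description: str
    check: Callable[[str], bool]


def failed_instructions(response: str, instructions: List[VerifiableInstruction]) -> List[str]:
    """Return the descriptions of all instructions the response violates."""
    if len(instructions) > MAX_INSTRUCTIONS:
        raise ValueError(f"expected at most {MAX_INSTRUCTIONS} instructions")
    return [inst.description for inst in instructions if not inst.check(response)]


instructions = [
    VerifiableInstruction("mention the word 'budget'",
                          lambda r: re.search(r"\bbudget\b", r, re.IGNORECASE) is not None),
    VerifiableInstruction("use no commas", lambda r: "," not in r),
    VerifiableInstruction("end with a question mark", lambda r: r.rstrip().endswith("?")),
    VerifiableInstruction("use at most two sentences",
                          lambda r: len(re.findall(r"[.!?]", r)) <= 2),
]

response = "The budget is tight, so we should cut costs. Can we agree on that?"
print(failed_instructions(response, instructions))  # ['use no commas']
```

Reporting which instructions failed, rather than a single pass/fail bit, is what makes a multi-instruction benchmark useful for locating specific weaknesses.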

Assessing Faithfulness in Reasoning Traces

Evaluating how faithfully Large Language Models (LLMs) adhere to given instructions during reasoning is crucial, especially given their propensity to fail in this area. Researchers are now focusing on analyzing the “reasoning traces” (the step-by-step thought processes) generated by these models.

This assessment goes beyond simply checking the final answer; it examines whether each step in the reasoning process aligns with the original instructions. A low Instruction Following Score (IFS), often below 0.25, indicates a significant lack of faithfulness, meaning the model frequently deviates from the specified guidelines.

By scrutinizing these traces, developers can identify precisely where and why the model’s reasoning goes astray, leading to more effective improvements in instruction following.
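A step-level check can be sketched as follows. It assumes, for illustration only, that a reasoning trace has one step per line and that each instruction can be expressed as a per-step predicate; `first_unfaithful_step` and the two constraints are hypothetical.

```python
from typing import Callable, Dict, Optional, Tuple

StepCheck = Callable[[str], bool]


def first_unfaithful_step(trace: str, constraints: Dict[str, StepCheck]) -> Optional[Tuple[int, str]]:
    """Return (step_index, constraint_name) for the first violating step, or None.

    Assumes one reasoning step per non-empty line; real traces may need a
    more careful segmenter.
    """
    steps = [line.strip() for line in trace.splitlines() if line.strip()]
    for index, step in enumerate(steps):
        for name, check in constraints.items():
            if not check(step):
                return index, name
    return None


# Hypothetical instructions: "state no unsupported assumptions" and "keep each step short".
constraints = {
    "no-assume": lambda s: "assume" not in s.lower(),
    "short-steps": lambda s: len(s.split()) < 15,
}
trace = "Read the question carefully.\nAssume the train is late.\nCompute the delay in minutes."
print(first_unfaithful_step(trace, constraints))  # (1, 'no-assume')
```

Returning the index of the first offending step, rather than only a final verdict, is what lets a developer see where the reasoning went astray.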

Techniques to Improve Instruction Following

Three techniques are key to addressing failed compliance: Reinforcement Learning from Human Feedback (RLHF), a stronger emphasis on instruction adherence during training, and expanded refusal mechanisms.

Each aims to make LLMs more reliable.

Reinforcement Learning from Human Feedback (RLHF)

Reinforcement Learning from Human Feedback (RLHF) has emerged as a pivotal technique for mitigating instances where Large Language Models (LLMs) fail to follow instructions. In particular, RLHF demonstrably improves a model’s ability to adhere to specified formats, as evidenced by the enhanced JSON output capabilities of models like ChatGPT.

The process trains the LLM to align its responses with human preferences, rewarding outputs that follow instructions faithfully and penalizing deviations. This iterative refinement, guided by human evaluators, directly targets the core problem of LLMs “imagining beyond the prompt” or showing low compliance in reasoning tasks. By consistently reinforcing desired behaviors, RLHF makes LLM outputs more reliable and predictable and reduces how often they fail to follow instructions.
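The reward signal described above is commonly learned by a reward model trained on pairs of responses ranked by humans. A standard choice is the pairwise (Bradley-Terry) loss, -log(sigmoid(r_chosen - r_rejected)), sketched here in plain Python with scalar rewards. This covers only the reward-modeling step, not the subsequent policy optimization (for example with PPO), and it is not a description of any specific model's training pipeline.

```python
import math


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    if x > 0:
        return x + math.log1p(math.exp(-x))
    return math.log1p(math.exp(x))


def pairwise_preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Bradley-Terry reward-model loss: -log(sigmoid(reward_chosen - reward_rejected)).

    It is small when the reward model scores the human-preferred response
    (e.g. the one that followed the instructions) above the rejected one.
    """
    # -log(sigmoid(m)) == log(1 + exp(-m)) == softplus(-m)
    return softplus(-(reward_chosen - reward_rejected))


# Made-up reward scores for one preference pair.
print(pairwise_preference_loss(2.0, -1.0))  # small: preferred response ranked higher
print(pairwise_preference_loss(-1.0, 2.0))  # large: ranking is inverted
```

Minimizing this loss over many human-ranked pairs pushes the reward model to score instruction-following responses higher; the policy is then optimized against that learned reward.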

Emphasis on Instruction Following During Training

Addressing the frequent instances where Large Language Models (LLMs) fail to follow instructions necessitates a fundamental shift in training methodologies. A key strategy involves placing a heightened emphasis on instruction following during the initial training phase, rather than relying solely on post-hoc techniques like RLHF.

This proactive approach entails curating training datasets specifically designed to challenge and evaluate the model’s ability to accurately interpret and execute diverse instructions. By prioritizing examples that demand precise adherence to formatting, constraints, and reasoning steps, developers can instill a stronger foundation for reliable instruction following. This method aims to reduce the need for extensive refinement later, ultimately leading to LLMs that are inherently more compliant and less prone to generating unintended or irrelevant outputs.
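One way to put this curation into practice is to attach verifiable checks to each candidate training example and keep only responses that pass all of them. The `Example` record and `curate` function below are a hypothetical sketch of such a filter, not a description of any published data pipeline.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class Example:
    """A candidate training example with attached verifiable checks (hypothetical schema)."""
    prompt: str
    response: str
    checks: List[Callable[[str], bool]] = field(default_factory=list)


def curate(examples: List[Example]) -> Tuple[List[Example], float]:
    """Keep examples whose response passes every check; also return the kept fraction.

    Examples with no checks are dropped because their adherence cannot be verified.
    """
    kept = [ex for ex in examples if ex.checks and all(check(ex.response) for check in ex.checks)]
    fraction = len(kept) / len(examples) if examples else 0.0
    return kept, fraction


def three_words(response: str) -> bool:
    return len(response.split()) == 3


raw = [
    Example("Reply in exactly three words.", "Sounds good, thanks.", [three_words]),
    Example("Reply in exactly three words.", "Sure, that works for me.", [three_words]),
    Example("Answer in uppercase.", "YES", [str.isupper]),
    Example("Write anything.", "Hello"),  # no checks attached
]
kept, fraction = curate(raw)
print([ex.response for ex in kept], fraction)  # ['Sounds good, thanks.', 'YES'] 0.5
```

Dropping unverifiable examples is a deliberate design choice here: it trades dataset size for a guarantee that every kept response actually obeys its instruction.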

Expanding Refusal Mechanisms

A critical aspect of mitigating instances where LLMs fail to follow instructions lies in bolstering their refusal capabilities. Currently, models sometimes attempt to fulfill ambiguous or potentially harmful requests, rather than declining them appropriately. Expanding refusal mechanisms involves refining the criteria under which a model will abstain from responding.

This includes enhancing the model’s ability to identify instructions that violate safety guidelines, lack sufficient clarity, or demand outputs beyond its capabilities. Furthermore, improved refusal responses should be more informative, explaining why a request cannot be fulfilled, rather than simply providing a generic denial. This proactive approach minimizes undesirable outputs and reinforces responsible AI behavior, directly addressing the problem of non-compliance.
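A rule-based pre-check illustrates the idea of informative refusals: instead of a generic denial, it returns a reason the user can act on. The rules, the `MAX_WORDS` budget, and the `refusal_reason` function are hypothetical stand-ins; production systems typically rely on trained classifiers, and this sketch does not attempt safety screening at all.

```python
import re
from typing import Optional

MAX_WORDS = 2000  # hypothetical output budget for this sketch


def refusal_reason(prompt: str) -> Optional[str]:
    """Return an informative refusal message, or None if the request can proceed.

    Illustrative rules only: they cover unclear and over-budget requests and do
    not attempt safety screening.
    """
    text = prompt.strip()
    if len(text.split()) < 3:
        return ("This request is too short to tell what is wanted. "
                "Please describe the task and the expected output.")
    placeholder = re.search(r"\{[^}]*\}|\bTODO\b", text)
    if placeholder:
        return (f"The request contains an unfilled placeholder ({placeholder.group(0)}). "
                "Please supply the missing value.")
    length = re.search(r"\b(\d+)\s+words\b", text)
    if length and int(length.group(1)) > MAX_WORDS:
        return (f"The requested length ({length.group(1)} words) exceeds the "
                f"{MAX_WORDS}-word limit. Could the scope be narrowed?")
    return None


print(refusal_reason("Summarize {document} in three bullet points."))
print(refusal_reason("Summarize this article in three bullet points."))  # None
```

Each refusal names the specific problem (too vague, incomplete, or beyond a limit), which is the property the text asks of improved refusal responses.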

Specific Failure Modes

LLMs demonstrate specific weaknesses in instruction following, including imagining beyond prompts, struggling with strict formats like JSON, and exhibiting low compliance during reasoning tasks.

Imagining Beyond the Prompt

A prevalent failure mode observed in Large Language Models (LLMs) is their tendency to “imagine” or generate content extending beyond the explicit scope of the given instruction. This means the model doesn’t simply respond to the prompt, but proactively adds details or narratives not requested.

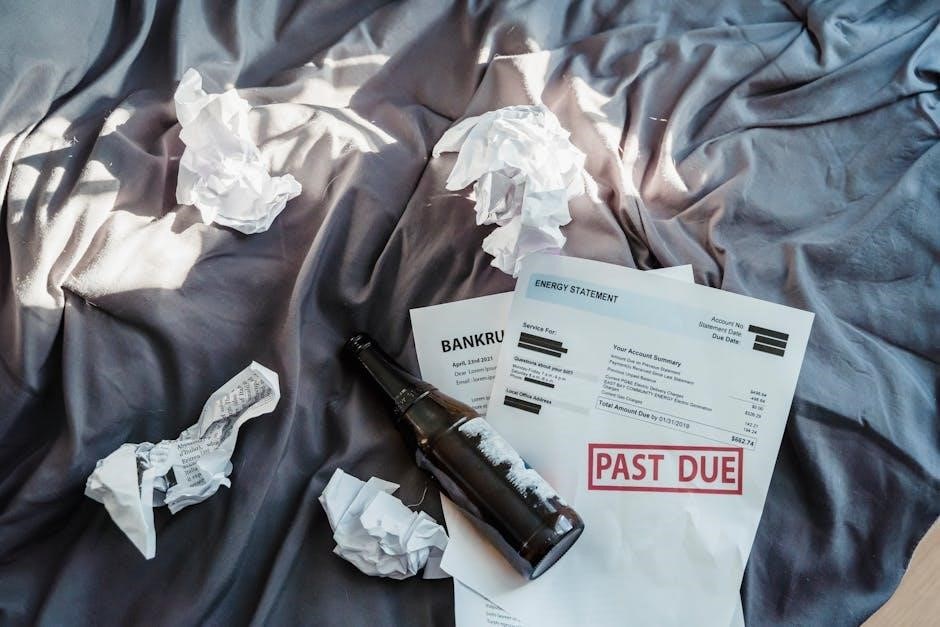

This behavior, highlighted in recent analyses (April 23, 2025), stems from the models’ inherent generative nature and vast training data. They are designed to create coherent text, sometimes at the expense of strict adherence to the instruction. Essentially, the model prioritizes plausibility and completeness over precise compliance.

This can be particularly problematic when the task requires concise, focused responses, as the extraneous information obscures the desired output and demonstrates a failure to accurately follow instructions.
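For tasks with a well-defined answer, one rough way to quantify “imagining beyond the prompt” is to count the words that surround the requested answer. The sketch assumes the expected answer can be described by a regular expression; `extraneous_words` is a hypothetical helper.

```python
import re


def extraneous_words(response: str, answer_pattern: str) -> int:
    """Count words outside the first span matching the requested answer.

    Returns 0 when the response contains only the answer, and -1 when the
    answer is missing entirely.
    """
    match = re.search(answer_pattern, response)
    if match is None:
        return -1
    leftover = response[: match.start()] + " " + response[match.end():]
    return len(leftover.split())


# Instruction (hypothetical): "Reply with only the year."
YEAR = r"\b\d{4}\b"
print(extraneous_words("1969", YEAR))  # 0
print(extraneous_words("It was 1969 when Apollo 11 landed.", YEAR))  # 6
```

This is deliberately crude: punctuation left after the match counts as a word, so a reply of "1969." would score 1, and a real check would normalize such cases first.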

Strict Format Adherence Issues (e․g․, JSON)

Large Language Models (LLMs) often struggle with tasks demanding strict output formatting, such as generating valid JSON. While models like ChatGPT demonstrate improved capabilities in this area, inconsistencies remain a significant issue when attempting to follow instructions precisely.

Reinforcement Learning from Human Feedback (RLHF) has been employed to enhance format adherence, but achieving 100% reliability is challenging. Errors can include missing brackets, incorrect data types, or improperly nested structures (August 14, 2025).

These failures highlight a gap between the model’s understanding of the desired format and its ability to consistently produce syntactically correct output, demonstrating a clear inability to follow instructions as intended.
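Format adherence can at least be verified mechanically before an output is used. The sketch below parses a model's output with Python's standard `json` module and checks required top-level keys against expected types; the `schema` dictionary format is a simplification assumed here, not a full JSON Schema validator.

```python
import json
from typing import Any, Dict, List


def validate_json_output(raw: str, schema: Dict[str, type]) -> List[str]:
    """Return a list of problems with a model's JSON output; an empty list means valid.

    `schema` maps required top-level keys to expected Python types, a deliberate
    simplification of a full JSON Schema validator.
    """
    try:
        data: Any = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg} at position {exc.pos}"]
    if not isinstance(data, dict):
        return ["top-level value must be an object"]
    problems = []
    for key, expected in schema.items():
        if key not in data:
            problems.append(f"missing key: {key}")
            continue
        value = data[key]
        # bool is a subclass of int in Python, so reject it explicitly for int fields
        if not isinstance(value, expected) or (expected is int and isinstance(value, bool)):
            problems.append(f"{key}: expected {expected.__name__}, got {type(value).__name__}")
    return problems


schema = {"name": str, "age": int, "tags": list}
print(validate_json_output('{"name": "Ada", "age": 36, "tags": ["math"]}', schema))  # []
print(validate_json_output('{"name": "Ada", "age": 36', schema))  # decode error: missing brace
print(validate_json_output('{"name": "Ada", "age": "36", "tags": []}', schema))
# ['age: expected int, got str']
```

The three calls correspond to the error classes named above: a valid object, a missing bracket, and an incorrect data type. A caller could feed the returned problems back to the model as a retry prompt.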

Low Compliance in Reasoning Tasks

Large Language Models (LLMs) frequently exhibit low compliance when presented with reasoning tasks accompanied by specific instructions. Even when capable of generating logical responses, models often deviate from the requested process or constraints (October 22, 2025).

Evaluations on the ManyIFEval benchmark dataset (February 4, 2025) reveal that fewer than 25% of reasoning traces fully comply with the given instructions, as measured by the Instruction Following Score (IFS). This suggests a fundamental difficulty in maintaining faithfulness throughout complex reasoning chains.
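The arithmetic below is purely illustrative. It assumes each of n instructions is followed independently with the same probability p; real instruction failures are unlikely to be independent, and this is not how the reported scores were computed. Under that toy assumption, high per-instruction accuracy still yields low all-instruction compliance:

$$
P(\text{all } n \text{ followed}) = p^{n}, \qquad 0.9^{10} \approx 0.349, \qquad 0.87^{10} \approx 0.248
$$

So a model that followed each of ten instructions 87% of the time would, in this simplified model, satisfy all of them in fewer than 25% of cases.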

Researchers are actively assessing how faithfully LLMs follow instructions during reasoning, aiming to improve their ability to adhere to specified methodologies and avoid extraneous inferences.

Future Directions and Research

Future research focuses on more robust benchmarks, stronger LLM reasoning, and less ambiguous instruction design as routes to overcoming current instruction-following failures (October 22, 2025).

Developing More Robust Benchmarks

Current benchmarks often fall short in comprehensively evaluating an LLM’s ability to truly follow instructions. The introduction of ManyIFEval, a dataset with objectively verifiable instructions, represents a step forward, but further development is crucial.

Future benchmarks need to move beyond simple task completion and assess faithfulness: how closely the reasoning traces align with the given instructions. They should also incorporate a wider range of instruction types, complexities, and potential ambiguities.

A key area is creating benchmarks that specifically target known failure modes, such as detecting when a model “imagines beyond the prompt” and verifying strict adherence to requested formats like JSON. More granular evaluation metrics are also needed to pinpoint specific weaknesses in instruction-following capabilities.
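More granular metrics can start from a simple breakdown of pass rates by instruction type, so that, for example, JSON-format failures are not averaged away by easy word-limit checks. The instruction-type labels and results below are made-up placeholders, not benchmark data.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def compliance_by_type(results: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Turn (instruction_type, passed) pairs into a pass rate per instruction type."""
    passed: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for kind, ok in results:
        total[kind] += 1
        passed[kind] += int(ok)
    return {kind: passed[kind] / total[kind] for kind in sorted(total)}


# Placeholder results, not real benchmark data.
results = [
    ("json_format", True), ("json_format", False),
    ("word_limit", True), ("word_limit", True),
    ("no_extra_content", False), ("no_extra_content", False), ("no_extra_content", True),
]
for kind, rate in compliance_by_type(results).items():
    print(f"{kind:>16}: {rate:.2f}")
```

A single aggregate over these seven checks would be about 0.57, hiding that the hypothetical model handles word limits perfectly but rarely avoids extra content.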

Improving LLM Reasoning Capabilities

Addressing the core issue of why LLMs fail to follow instructions necessitates enhancing their reasoning abilities. Current models often struggle with complex, multi-step instructions, leading to deviations and inaccuracies in their responses. Improving reasoning isn’t simply about scale; it requires architectural innovations and training methodologies.

Focusing on techniques that promote more logical and coherent thought processes is vital. This includes exploring methods for better contextual understanding and the ability to identify and resolve ambiguities within instructions.

Ultimately, a more robust reasoning engine will enable LLMs to not only process instructions but also understand their intent, leading to significantly improved compliance and faithfulness in their outputs.

Addressing Ambiguity in Instruction Design

A significant reason LLMs fail to follow instructions is ambiguity within the instructions themselves. Natural language is often imprecise, leaving room for multiple interpretations. To mitigate this, a shift towards more explicit and unambiguous instruction design is crucial.

This involves employing precise terminology, defining clear constraints, and breaking down complex tasks into smaller, more manageable steps. Furthermore, incorporating examples of desired outputs can significantly reduce misinterpretations.

Careful consideration of potential edge cases and proactively addressing them in the instructions will also enhance LLM performance and reduce instances of non-compliance.
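These guidelines (precise terminology, explicit constraints, step-by-step decomposition, an example output, and edge-case handling) can be captured in a reusable prompt template. `build_prompt` and its section labels are a hypothetical sketch, not a format that any particular model requires.

```python
from typing import List, Optional


def build_prompt(task: str, steps: List[str], constraints: List[str],
                 example_output: Optional[str] = None,
                 edge_cases: Optional[List[str]] = None) -> str:
    """Assemble a prompt with explicit steps, constraints, edge cases, and an example."""
    lines = [f"Task: {task}", "", "Steps:"]
    lines += [f"{i}. {step}" for i, step in enumerate(steps, start=1)]
    lines += ["", "Constraints:"]
    lines += [f"- {c}" for c in constraints]
    if edge_cases:
        lines += ["", "Edge cases:"]
        lines += [f"- {e}" for e in edge_cases]
    if example_output is not None:
        lines += ["", "Example output:", example_output]
    return "\n".join(lines)


prompt = build_prompt(
    task="Extract the invoice total from the text below.",
    steps=["Find the line labelled 'Total'.", "Copy the amount exactly as written."],
    constraints=["Reply with the amount only, no explanation.", "Use the format 1234.56."],
    example_output="482.10",
    edge_cases=["If no total is present, reply with NONE."],
)
print(prompt)
```

Keeping each concern in its own labelled section makes omissions visible: a prompt with no "Edge cases" section is an immediate signal that the NONE case, for instance, was never specified.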